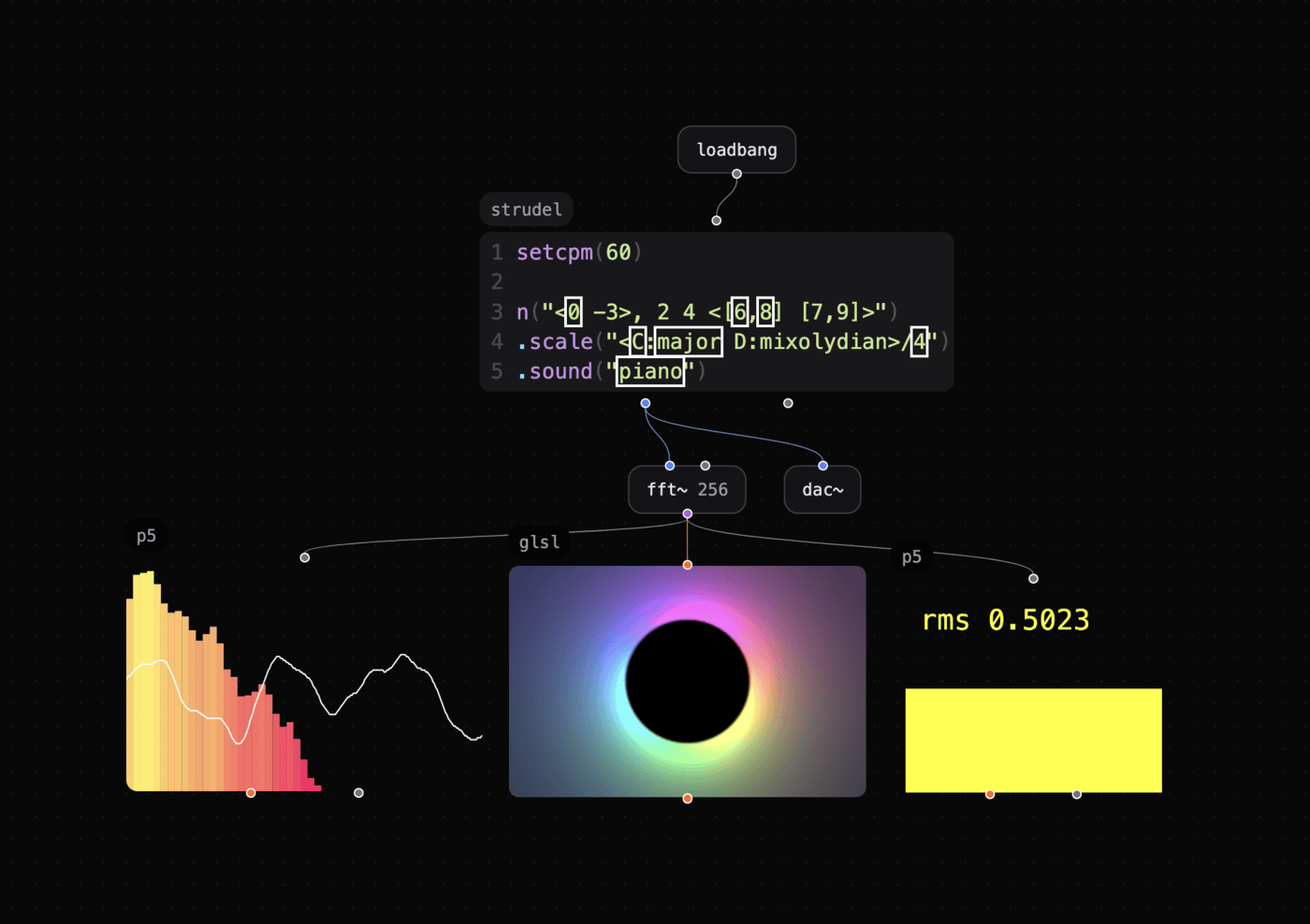

Audio Reactivity

✨ Try this patch with audio-reactive visuals!

The fft~ audio object gives you an array of frequency bins that you can use to create visualizations in your patch.

Getting Started

- Create a fft~ object with bin size (e.g., fft~ 1024)
- Connect the purple "analyzer" outlet to a visual object's inlet

Supported objects: glsl, swgl, and any objects using the JavaScript Runner like canvas.dom, hydra, and more.

Usage with GLSL

- Create a sampler2D GLSL uniform inlet
- Connect the purple "analyzer" outlet of fft~ to it
- Try the fft-freq.gl and fft-waveform.gl presets

To receive the waveform (time-domain data) instead of the frequency spectrum, the uniform must be named exactly waveTexture: uniform sampler2D waveTexture;

Usage with JavaScript Objects

Call the fft() function to get audio analysis data:

```js
// Frequency spectrum
fft({ type: 'freq' })

// Waveform (default)
fft() // or fft({ type: 'wave' })
```

Important: Patchies does NOT use standard Hydra/P5.js audio APIs. Use fft() instead.

FFTAnalysis Properties

The fft() function returns an FFTAnalysis instance:

| Property/Method | Description |
|---|---|
| fft().a | Raw bins (Uint8Array) |
| fft().f | Normalized bins (Float32Array, 0-1) |
| fft().rms | RMS amplitude (float, 0-1). Uses time-domain signal for wave mode, spectral energy for freq mode |
| fft().avg | Average level (float) |
| fft().centroid | Spectral centroid (float) |
| fft().getEnergy('bass') | Energy in frequency range (0-1) |
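To make the rms and centroid values more concrete, here is a minimal sketch of how such numbers are commonly derived from raw analysis data. Both helpers (rmsFromWaveBytes, spectralCentroid) are hypothetical and are not taken from the Patchies source. The sketch assumes that waveform bytes are centred on 128 (as Web Audio's byte time-domain data is) and that the centroid is expressed in Hz, which this page does not confirm.

```javascript
// Sketch only: hypothetical helpers, not Patchies internals.

// RMS of a time-domain waveform stored as unsigned bytes.
// Assumption: 128 represents silence, as in Web Audio byte time-domain data.
function rmsFromWaveBytes(bytes) {
  if (bytes.length === 0) return 0;
  let sumSquares = 0;
  for (const b of bytes) {
    const sample = (b - 128) / 128; // map 0..255 to roughly -1..1
    sumSquares += sample * sample;
  }
  return Math.sqrt(sumSquares / bytes.length);
}

// Spectral centroid: the magnitude-weighted mean frequency, in Hz.
// Assumption: bin i sits at i * (sampleRate / 2) / bins.length.
function spectralCentroid(bins, sampleRate) {
  const hzPerBin = sampleRate / 2 / bins.length;
  let weighted = 0;
  let total = 0;
  for (let i = 0; i < bins.length; i++) {
    weighted += i * hzPerBin * bins[i];
    total += bins[i];
  }
  return total === 0 ? 0 : weighted / total;
}
```

In a patch you would normally read fft().rms and fft().centroid directly; the sketch is only meant to show what kind of quantity each one describes.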

Frequency ranges: bass, lowMid, mid, highMid, treble

Custom range: fft().getEnergy(40, 200)
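As an illustration of what an energy value for a custom range represents, the sketch below averages the raw bins that fall between two frequencies and scales the result to 0-1. The helper energyInRange is hypothetical, and the sample rate must be supplied by the caller; how Patchies maps Hz to bins internally, and where the named bands begin and end, is not stated here.

```javascript
// Sketch only: a hypothetical helper, not the Patchies implementation.
// Averages the raw bins (0-255) between lowHz and highHz and scales to 0-1.
function energyInRange(bins, sampleRate, lowHz, highHz) {
  if (bins.length === 0) return 0;
  const nyquist = sampleRate / 2;
  const lastIndex = bins.length - 1;
  // Convert a frequency in Hz to the nearest bin index, clamped to the array.
  const toIndex = (hz) =>
    Math.min(lastIndex, Math.max(0, Math.round((hz / nyquist) * bins.length)));
  const start = toIndex(Math.min(lowHz, highHz));
  const end = toIndex(Math.max(lowHz, highHz));
  let sum = 0;
  for (let i = start; i <= end; i++) sum += bins[i];
  return sum / (end - start + 1) / 255;
}
```

For example, energyInRange(fft({ type: 'freq' }).a, 44100, 40, 200) would cover the same range as the custom call above, with 44100 being an assumed sample rate.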

Where to Call fft()

- p5: in your draw function
- canvas/canvas.dom: in requestAnimationFrame callback
- js: in setInterval or requestAnimationFrame
- hydra: inside arrow functions for dynamic parameters

```js
// Hydra example
let a = () => fft().getEnergy("bass");

osc(10, 0, () => a() * 4).out()
```
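For the canvas case, a per-frame bar visualization could be sketched as follows. drawBars is a hypothetical helper that receives the 2D context, the normalized bins, and the canvas size as arguments, so it makes no assumptions about which globals a canvas.dom object exposes; the commented loop at the bottom assumes ctx, width, and height are available there.

```javascript
// Sketch only: drawBars is a hypothetical helper, not a Patchies API.
// Draws one vertical bar per bin; bins are expected in the 0-1 range.
function drawBars(ctx, bins, width, height) {
  ctx.clearRect(0, 0, width, height);
  const barWidth = width / bins.length;
  for (let i = 0; i < bins.length; i++) {
    const barHeight = bins[i] * height;
    // Bars grow upward from the bottom edge of the canvas.
    ctx.fillRect(i * barWidth, height - barHeight, barWidth, barHeight);
  }
}

// Possible use inside a canvas.dom object
// (assumes ctx, width and height exist in that scope):
// function frame() {
//   drawBars(ctx, fft({ type: 'freq' }).f, width, height);
//   requestAnimationFrame(frame);
// }
// requestAnimationFrame(frame);
```

Calling fft() inside the frame callback, rather than once at the top of the script, is what keeps the bars following the audio.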

Presets

- fft.hydra - Hydra audio visualization
- fft.p5, fft-sm.p5, rms.p5 - P5.js visualizations
- fft.canvas - Fast canvas visualization (uses canvas.dom)

Performance Tips

- Use canvas.dom or p5 for instant FFT reactivity
- Worker-based canvas has slight delay but better video chaining performance

Converting Existing Code

From Hydra

```diff
- osc(10, 0, () => a.fft[0]*4)
+ osc(10, 0, () => fft().f[0]*4)
  .out()
```

- Replace a.fft[0] with fft().a[0] (int 0-255) or fft().f[0] (float 0-1)
- Instead of a.setBins(32), set bins in fft~ object: fft~ 32
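If a ported Hydra sketch indexes only a handful of bands and you would rather keep a larger bin size on the fft~ object, one option is to average the bins into groups in code. toBands below is a hypothetical helper, not part of Patchies or Hydra, and is offered as an alternative rather than the recommended route.

```javascript
// Sketch only: toBands is a hypothetical helper, not part of Patchies or Hydra.
// Splits the bins into bandCount contiguous groups and averages each group.
function toBands(bins, bandCount) {
  const bands = new Array(bandCount).fill(0);
  if (bins.length === 0) return bands;
  const size = bins.length / bandCount;
  for (let b = 0; b < bandCount; b++) {
    const start = Math.floor(b * size);
    // Every band covers at least one bin, even if bandCount > bins.length.
    const end = Math.max(start + 1, Math.floor((b + 1) * size));
    let sum = 0;
    for (let i = start; i < end; i++) sum += bins[i];
    bands[b] = sum / (end - start);
  }
  return bands;
}
```

A converted line might then read osc(10, 0, () => toBands(fft().f, 4)[0] * 4).out(), where the band count of 4 is only an example value.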

From P5.js

| P5.js | Patchies |
|---|---|
| p5.Amplitude | fft().rms |
| p5.FFT | fft() |
| fft.analyze() | (not needed) |
| fft.waveform() | fft({ format: 'float' }).a |
| fft.getEnergy('bass') | fft().getEnergy('bass') |
| fft.getCentroid() | fft().centroid |

See Also

- JavaScript Runner - API reference

- Video Chaining - Connect visual objects

- Rendering Pipeline - Performance details

- env~ - envelope follower for audio loudness

- scope~ - audio oscilloscope

- meter~ - visual level meter